Kaldi on AWS

General Notes

- Nice intro to Amazon’s EC2 services

- Use tmux: nice for running a model in the background after exiting ssh

- Install emacs or vim: you’re going to need some kind of text editor which works well in the terminal.

- Installing Kaldi on t2.micro will run out of RAM and crash, so you’re going to have to pay.

Set up Instance

Choose Instance

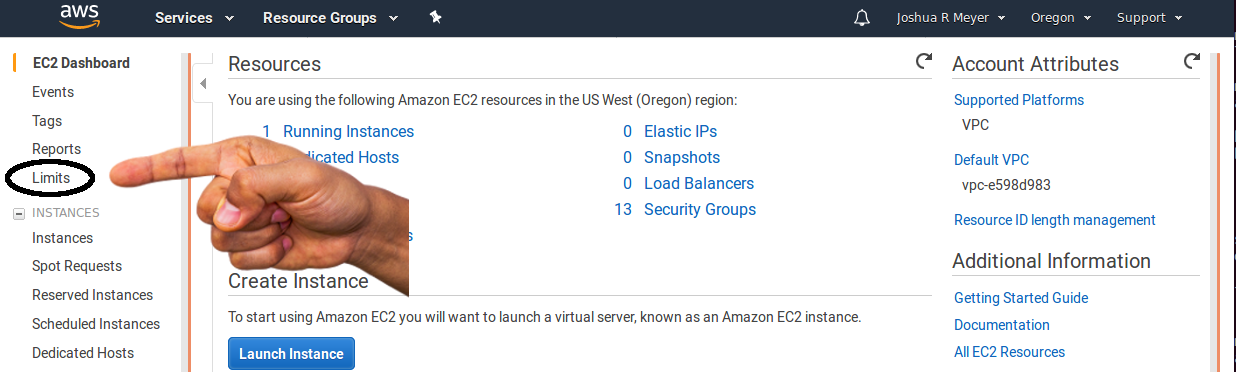

You can only launch instance types which you have permissions for. Find the list of available instances here:

Instance Info

https://aws.amazon.com/ec2/instance-types/

Instance Pricing

https://aws.amazon.com/ec2/pricing/on-demand/

My Set-up

I’ve been working on the following set-up, so it should work for you as well.

- operating system: Ubuntu Server 16.04 LTS

- instance type: g2.2xlarge

The instance type I’m using is for GPUs, which is probably what you want… especially if you’re using nnet3. It’s more expensive than using a CPU, but if you’re running lots of data or experiments, you will want GPUs. Each iteration in DNN training takes a lot less time on a GPU.

If you really want to use a CPU system, I’d recommend c4.xlarge. Actually, if you’re only running GMMs, then more CPUs is better than a single GPU, since GMMs don’t train on GPUs, and it’s easier to parallelize GMMs so you get more bang for your buck with a bunch of CPUs.

Don’t forget: a standard, compiled Kaldi will take up to 15 gigs of disk space, so make sure you allocate it on the instance when you’re setting it up (on the Storage step). This is also why you should really use ./configure --shared below: it will shave off some gigs.
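If you want to double-check the disk before compiling, something like this could be run on the instance. It is only a sketch: the 15G threshold is the rough estimate from above, and free_gb is a helper name I made up for illustration.

```shell
# free_gb is a made-up helper name for this sketch, not a standard tool.
# It prints the free space (whole gigabytes) on the filesystem that holds DIR.
free_gb() {
    # -P: one line per filesystem; -k: sizes in 1K blocks; column 4 is "Available".
    df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

needed=15                      # rough size of a compiled Kaldi, from the estimate above
have=$(free_gb "${HOME:-/}")   # check the volume your home directory lives on

if [ "$have" -lt "$needed" ]; then
    echo "Only ${have}G free; a standard Kaldi build may not fit."
else
    echo "${have}G free; that should be enough for Kaldi."
fi
```

If it reports too little space, it is easier to launch with a bigger volume than to clean up halfway through a build.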

Download Key Pair

Generate a key-pair, and make it secure with chmod (this is a necessary step).

Don’t lose this file, or you won’t be able to get back into your instance!

chmod 400 your-key-pair.pem

SSH into instance

Now use your key-pair and ssh into your instance.

ssh -i "your-key-pair.pem" ubuntu@ec2-11-222-33-444.us-west-2.compute.amazonaws.com
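If ssh complains that the permissions on your key are "too open", the chmod step was skipped or undone. Here is a small sketch that checks the key before connecting. The helper name key_is_private is hypothetical, and it assumes GNU stat (Linux); the stat flags differ on macOS.

```shell
# key_is_private is a hypothetical helper for this sketch.
# It succeeds only if FILE is accessible by its owner alone (mode 400 or 600).
key_is_private() {
    local mode
    mode=$(stat -c '%a' "$1") || return 1   # GNU stat; prints the mode in octal
    [ "$mode" = "400" ] || [ "$mode" = "600" ]
}

key="your-key-pair.pem"
if [ -f "$key" ] && ! key_is_private "$key"; then
    echo "Tightening permissions on $key"
    chmod 400 "$key"
fi
```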

Update and Upgrade Ubuntu

This is necessary to install Kaldi successfully.

sudo apt-get update; sudo apt-get upgrade

If you’ve gotten to this point without any hiccups, you should have a working Ubuntu installation on your AWS instance! Now, let’s quickly download and compile Kaldi on our new instance:)

Install Dependencies

sudo apt-get install g++ make automake autoconf python zlib1g-dev libtool subversion libatlas3-base sox bc emacs24 tmux
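To confirm the install worked before moving on, you could check that the main commands are on your PATH. This is a minimal sketch; missing_tools is a hypothetical helper, and the command list is my own pick from the packages above (plus git, which the clone step needs).

```shell
# missing_tools is a hypothetical helper: it prints every command from its
# arguments that is not on the PATH, and fails if any are missing.
missing_tools() {
    local cmd missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=1
        fi
    done
    return "$missing"
}

# The subversion package installs the `svn` command.
missing_tools g++ make automake autoconf svn sox bc tmux git \
    || echo "Install the missing packages before compiling Kaldi."
```

Once Kaldi is cloned, its own tools/extras/check_dependencies.sh does a more thorough check.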

Install CUDA

I got these steps from this good post on askubuntu. If you have issues, that post is more detailed, check it out. Normally, though, these steps should work.

First download CUDA from NVIDIA. You might need a different link here depending on when you read this.

sudo wget https://developer.nvidia.com/compute/cuda/9.0/Prod/local_installers/cuda_9.0.176_384.81_linux-run

Install CUDA:

sudo sh cuda_9.0.176_384.81_linux-run --override

Add CUDA to your PATH variable:

echo 'export PATH=$PATH:/usr/local/cuda-9.0/bin' >> ~/.profile

source ~/.profile

Take a look at your install:

nvcc --version

Once you’re training, you can look at GPU usage (like top for CPUs) like this:

watch nvidia-smi
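watch only helps while you are looking at the screen. If you would rather keep a record of GPU usage over a whole training run, nvidia-smi can also print selected fields as CSV on a loop. A sketch, where log_gpu_usage is a hypothetical helper name and the 5-second default is arbitrary:

```shell
# log_gpu_usage is a hypothetical helper for this sketch.
# It appends GPU utilization and memory use to FILE every SECONDS seconds
# (default 5) until you stop it with Ctrl-c.
log_gpu_usage() {
    local out=$1 interval=${2:-5}
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "nvidia-smi not found; is the NVIDIA driver installed?" >&2
        return 1
    fi
    nvidia-smi --query-gpu=timestamp,utilization.gpu,memory.used,memory.total \
               --format=csv -l "$interval" >> "$out"
}

# Example (the file name is a placeholder):
#   log_gpu_usage gpu-usage.csv 10
```

If utilization stays near zero during DNN training, Kaldi is probably running on the CPU.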

Install Kaldi on Instance

The following is a list of commands, assuming you’ve already had experience installing Kaldi. However, if you’re interested, here’s a thorough walk-through on installing Kaldi.

Clone Kaldi

git clone https://github.com/kaldi-asr/kaldi.git

Compile 3rd Party Tools

ubuntu@ip-172-31-35-58:~/kaldi/tools$ make -j `nproc`

ubuntu@ip-172-31-35-58:~/kaldi/tools$ extras/install_irstlm.sh

Compile Kaldi Source

# use --shared to use shared vs static links... saves a TON of

# space on disk (thanks to Fran Tyers for pointing this out to me!)

ubuntu@ip-172-31-35-58:~/kaldi/src$ ./configure --shared

ubuntu@ip-172-31-35-58:~/kaldi/src$ make depend -j `nproc`

ubuntu@ip-172-31-35-58:~/kaldi/src$ make -j `nproc`

At this point, if you didn’t get any ERROR messages or major WARNINGs, you should have a working installation of Kaldi in the cloud!
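Since the point of a GPU instance is GPU training, it is worth checking that configure actually picked up CUDA. The sketch below assumes that configure records this in src/kaldi.mk as a line reading CUDA = true; treat that format as an assumption and look at the file yourself if the check surprises you. kaldi_has_cuda is a hypothetical helper name, and the path assumes Kaldi was cloned into your home directory.

```shell
# kaldi_has_cuda is a hypothetical helper for this sketch.
# It succeeds if the given kaldi.mk contains a "CUDA = true" line.
# Assumption: configure writes that line when it finds the CUDA toolkit.
kaldi_has_cuda() {
    grep -Eq '^CUDA[[:space:]]*=[[:space:]]*true' "$1"
}

mk="$HOME/kaldi/src/kaldi.mk"
if [ -f "$mk" ]; then
    if kaldi_has_cuda "$mk"; then
        echo "Kaldi was configured with CUDA."
    else
        echo "No CUDA in $mk; re-run ./configure once nvcc is on your PATH."
    fi
fi
```

If configure cannot find the toolkit on its own, ./configure --help lists the options for pointing it at the CUDA directory.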

To make sure you’ve got everything working, go ahead and run the simple yesno demo:

Test it out!

ubuntu@ip-172-31-35-58:~/kaldi/src$ cd ../egs/yesno/s5

ubuntu@ip-172-31-35-58:~/kaldi/egs/yesno/s5$ ./run.sh

If it runs as normal, you’re good to go:)

Now you can transfer your own data to your instance and start working. You should probably check out Amazon’s EBS volumes for storing your data, but that’s another post. Here, I’m just going to show you how to transfer data from your local computer to your new instance, and then you should be able to train models as if they were on your machine.

Transfer Data

Transfer Data from Laptop to Instance

You can easily transfer data from your local machine to your new AWS instance. Open a terminal on your machine and do something like this:

scp -i ~/Desktop/your-key-pair.pem ~/Desktop/your-local-data.tar.gz ubuntu@ec2-11-222-33-444.us-west-2.compute.amazonaws.com:~/data/

Attach an EBS Volume

From what I gather, this is the best way to work with data on AWS for these kinds of applications (machine learning training).

If you have your data on an EBS volume, it is logically separate from your scripts and codebase on the EC2 instance. Splitting data and code is good practice, especially if you’re using git and GitHub.

My code for speech recognition experiments is in one git repo, and I can easily spin up an EC2 instance, clone my repo, and use symbolic links to my data on EBS after I’ve mounted it. This way, you can plug and play with different data sets, or code bases.

Also, git will get mad at you if your files are too big or if your repo is too big. You should really only have text files on git.

- create EBS Volume in same availability zone (not just region!) as your EC2

- put data on EBS Volume

Then you need to:

- stop EC2 instance

- attach EBS to EC2

- mount EBS from within an ssh session on EC2
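The last step, plus the symbolic links mentioned above, could look roughly like this from within your ssh session. It is a sketch under assumptions: the device name (/dev/xvdf is common but not guaranteed, so check lsblk), the mount point, and both helper names are placeholders of mine.

```shell
# mount_ebs and link_data are hypothetical helpers for this sketch.

# mount_ebs DEVICE MOUNTPOINT: mount an attached EBS volume (needs sudo).
# Find DEVICE with `lsblk` after attaching the volume.
mount_ebs() {
    local dev=$1 mnt=$2
    # A brand-new volume has no filesystem yet (`sudo file -s DEVICE` prints
    # just "data"). Uncomment ONLY for an empty volume: mkfs erases everything.
    # sudo mkfs -t ext4 "$dev"
    sudo mkdir -p "$mnt"
    sudo mount "$dev" "$mnt"
}

# link_data SRC DEST: symlink a data directory on the volume to DEST,
# so your experiment directory can point at data that lives on EBS.
link_data() {
    local src=$1 dest=$2
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    ln -sfn "$src" "$dest"
}

# Example (all paths are placeholders):
#   mount_ebs /dev/xvdf /data
#   link_data /data/my-corpus ~/my-repo/corpus
```

Keeping the link target out of git (for example in .gitignore) keeps the repo down to text files.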

Train Models

If you want a real walk-through, check out my post on how to train a DNN in Kaldi. The following is more of a cheatsheet than anything.

The Quick and Dirty

- ssh into your instance:

ssh -i ~/Desktop/your-key-pair.pem ubuntu@ec2-11-222-33-444.us-west-2.compute.amazonaws.com

- start new terminal session:

tmux new -s my-session

- run model:

KALDI$ ./run.sh

- exit terminal session without terminating:

Ctrl-b d

- get back into terminal session:

tmux a -t my-session
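If you would rather start training without attaching at all, tmux can create the session already detached and run the script in it. A sketch, where start_training is a hypothetical helper name and run.log is an arbitrary log file name:

```shell
# start_training is a hypothetical helper for this sketch.
# It runs ./run.sh from DIR inside a detached tmux session named SESSION,
# and copies everything the script prints into DIR/run.log.
start_training() {
    local session=$1 dir=$2
    tmux new-session -d -s "$session" -c "$dir" './run.sh 2>&1 | tee run.log'
}

# Example (paths are placeholders):
#   start_training my-session ~/kaldi/egs/yesno/s5
#   tail -f ~/kaldi/egs/yesno/s5/run.log
```

The session closes when run.sh finishes, so the log file is what you come back to. While the script is still running, tmux a -t my-session attaches as usual.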

Conclusion

I hope this was helpful!

Let me know if you have comments or suggestions; you can always leave a comment below.

Happy Kaldi-ing!